AI is breaking traditional SaaS unit economics

In the last two years, I've reviewed quite a few AI-heavy SaaS companies ("AI Wrappers"). The pattern is consistent: impressive growth rates paired with shockingly low gross margins.

Traditional SaaS companies I evaluate typically show 75-90% gross margins. The AI-heavy ones? They are essentially reselling (fine-tuned) LLM output at 50-60% gross margins.

Some of the companies I saw were dangerously close to negative unit economics. With seat-based pricing and no usage limits, the cost of acquiring and serving a customer can climb above the revenue collected over that customer's lifetime.

It happens to the best - even pricier flat-rate subscriptions don't necessarily prevent this.

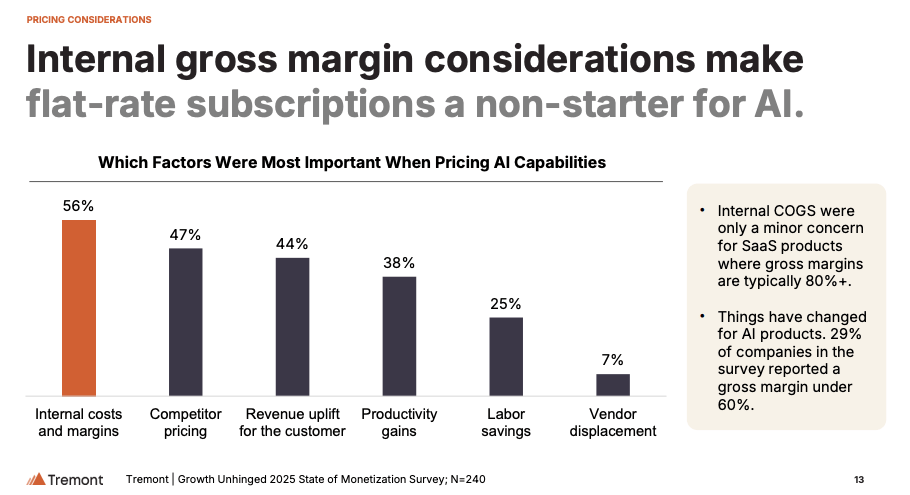

Tremont's data backs up what I'm seeing: 29% of the companies that took their survey report gross margins below 60%. If you're struggling to cover the cost of delivering AI capabilities with your current pricing, their report offers some inspiration on how to fix this.

Source: Tremont
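To make the margin math concrete, here is a minimal sketch of how gross margin on a flat-rate seat moves with usage. Every number in it (the $30 seat price, the $3 of non-LLM cost, the $0.02 per request) is a made-up assumption for illustration, not data from the survey or from any company I reviewed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SeatPlan:
    """Hypothetical flat-rate plan; all figures are illustrative assumptions."""
    price_per_seat: float       # monthly revenue per seat, USD
    fixed_cogs_per_seat: float  # hosting, support, etc. per seat, USD
    cost_per_request: float     # blended LLM cost per request, USD


def gross_margin(plan: SeatPlan, requests_per_month: int) -> float:
    """Gross margin as a fraction of revenue for one seat at a given usage level."""
    cogs = plan.fixed_cogs_per_seat + plan.cost_per_request * requests_per_month
    return (plan.price_per_seat - cogs) / plan.price_per_seat


def break_even_requests(plan: SeatPlan) -> float:
    """Monthly requests at which a seat's gross margin reaches zero."""
    return (plan.price_per_seat - plan.fixed_cogs_per_seat) / plan.cost_per_request


plan = SeatPlan(price_per_seat=30.0, fixed_cogs_per_seat=3.0, cost_per_request=0.02)
for usage in (100, 500, 1_000, 2_000):
    print(f"{usage:>5} requests/month -> gross margin {gross_margin(plan, usage):.0%}")
print(f"break-even at {break_even_requests(plan):.0f} requests/month")
```

With these assumed inputs, a light user at 100 requests a month leaves a margin of about 83%, a user at 500 requests is already below 60%, and anything beyond 1,350 requests loses money on that seat. The point is not the specific numbers but that an unlimited plan leaves the margin entirely in the hands of your heaviest users.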

From experience, most SaaS founders aren't super scientific about pricing (especially bootstrappers). But if you're offering a product with AI at its core, the pressure to become obsessed with pricing and understand unit economics is rising fast.

That applies not only to founders, but also to your product team and engineers.

Why? Because AI features introduce a significant variable cost to each user interaction. Each query to a large language model incurs expense in computing power or API fees.
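As a rough illustration of that variable cost, the sketch below prices a single API call from its token counts and then scales it to a month of usage. The per-million-token prices and the usage pattern are hypothetical placeholders; substitute the numbers from your own provider's price list and your own logs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical per-million-token prices in USD (not any vendor's real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one LLM call, given its token counts."""
    return (
        input_tokens * pricing.input_per_million
        + output_tokens * pricing.output_per_million
    ) / 1_000_000


def monthly_cost_per_user(
    pricing: TokenPricing,
    input_tokens: int,
    output_tokens: int,
    requests_per_day: int,
    days: int = 30,
) -> float:
    """LLM cost in USD for one user over a month of identical requests."""
    return request_cost(pricing, input_tokens, output_tokens) * requests_per_day * days


# Assumed prices: $3 per million input tokens, $15 per million output tokens.
pricing = TokenPricing(input_per_million=3.0, output_per_million=15.0)
per_call = request_cost(pricing, input_tokens=2_000, output_tokens=500)
per_month = monthly_cost_per_user(pricing, 2_000, 500, requests_per_day=20)
print(f"per call: ${per_call:.4f}, per user per month: ${per_month:.2f}")
```

Under these assumptions a single call costs a bit over a cent, which sounds negligible until 20 calls a day turn it into roughly $8 per user per month.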

Think you can just use an open-source LLM to save costs? This analysis shows it's more complicated than that.

Companies usually let engineering teams pick tech stacks purely on technical merit - and engineers have a tendency to go for the new shiny tech instead of the "good enough" solution (you can’t blame them!).

In traditional SaaS, this was totally fine. Engineering decisions rarely impacted unit economics. Build a new feature, serve it to 1,000 users or 100,000 - product delivery costs stayed roughly the same.

With AI coming into play, there's now a big risk that your COGS go through the roof and you'll discover unit economics don't work.

What's Different Now

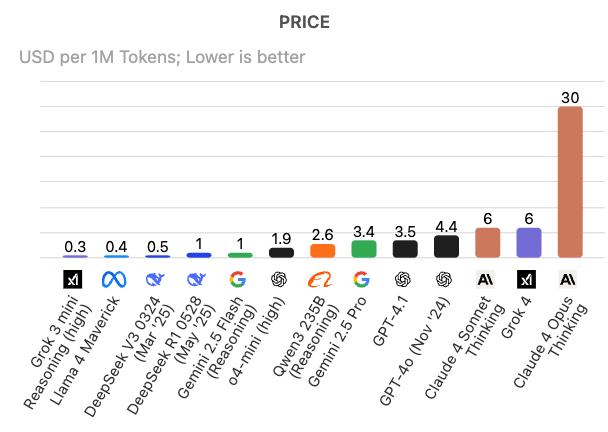

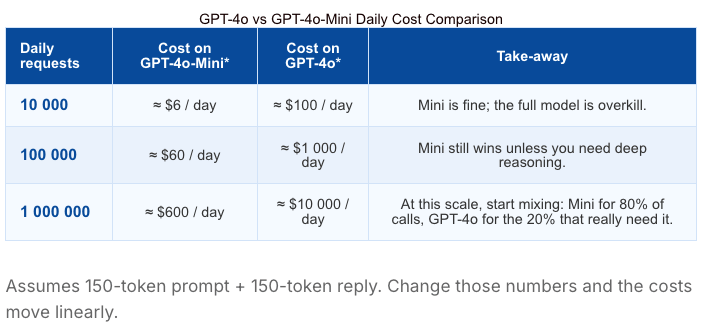

- LLM API calls cost real money that scales with customers/usage
- Gross margins highly depend on architecture choices
- Model selection = pricing strategy decision

Source: ptolemay
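One way to see why model selection is a pricing decision: for a target gross margin, each model tier implies a different minimum price per seat. The sketch below is a back-of-the-envelope calculation, and the model tiers, per-request costs, usage level, and 75% target are all assumptions chosen for illustration.

```python
def min_price_for_margin(cogs_per_seat: float, target_margin: float) -> float:
    """Smallest price at which (price - cogs) / price equals target_margin."""
    if not 0 <= target_margin < 1:
        raise ValueError("target_margin must be in [0, 1)")
    return cogs_per_seat / (1 - target_margin)


def price_floors(
    cost_per_request_by_model: dict[str, float],
    requests_per_seat: int,
    other_cogs_per_seat: float,
    target_margin: float,
) -> dict[str, float]:
    """Minimum monthly seat price per model tier for the given target margin."""
    return {
        model: min_price_for_margin(
            other_cogs_per_seat + cost * requests_per_seat, target_margin
        )
        for model, cost in cost_per_request_by_model.items()
    }


# Hypothetical blended cost per request for three model tiers, in USD.
MODEL_COST_PER_REQUEST = {"small": 0.002, "medium": 0.01, "large": 0.04}

floors = price_floors(
    MODEL_COST_PER_REQUEST,
    requests_per_seat=600,      # assumed monthly usage per seat
    other_cogs_per_seat=3.0,    # assumed non-LLM cost per seat, USD
    target_margin=0.75,
)
for model, price in floors.items():
    print(f"{model:>6}: charge at least ${price:.2f} per seat per month")
```

With these inputs, the price floor is roughly $17 for the small tier, $36 for the medium tier, and $108 for the large one. Same product, same usage, and the engineer's model choice has moved the viable price point by more than 6x.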

The engineer's role now extends beyond creating functional systems - it's also about picking a cost-efficient tech stack that sustains business profitability.

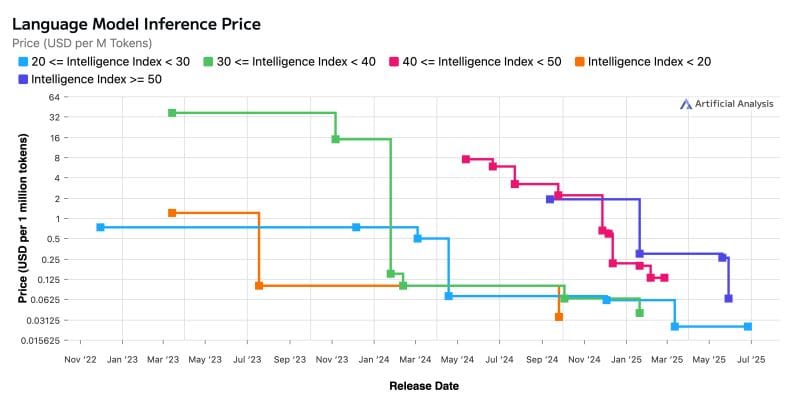

Also, given how fast performance and pricing evolve, architecture should be designed to avoid vendor lock-in. What's cost-effective today might be expensive tomorrow, and vice versa.
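A minimal sketch of what avoiding lock-in could look like in code: product code talks to one small gateway, and each vendor sits behind the same interface so that switching is a configuration change rather than a rewrite. All names here (`LLMProvider`, `LLMGateway`, `EchoProvider`, `vendor_a`, `vendor_b`) are hypothetical, and the stand-in provider only echoes the prompt; a real adapter would wrap a vendor SDK call in `complete`.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Completion:
    text: str
    cost_usd: float


class LLMProvider(Protocol):
    """Interface every vendor adapter is assumed to implement."""
    name: str

    def complete(self, prompt: str) -> Completion: ...


@dataclass
class EchoProvider:
    """Stand-in provider for this sketch; it makes no network calls."""
    name: str
    cost_per_call: float

    def complete(self, prompt: str) -> Completion:
        return Completion(text=f"[{self.name}] {prompt}", cost_usd=self.cost_per_call)


class LLMGateway:
    """Single entry point for product code; also tracks spend across providers."""

    def __init__(self, providers: dict[str, LLMProvider], default: str) -> None:
        if default not in providers:
            raise KeyError(default)
        self._providers = providers
        self._active = default
        self.spend_usd = 0.0

    def use(self, name: str) -> None:
        """Switch the active provider, e.g. after a price change."""
        if name not in self._providers:
            raise KeyError(name)
        self._active = name

    def complete(self, prompt: str) -> Completion:
        result = self._providers[self._active].complete(prompt)
        self.spend_usd += result.cost_usd
        return result


gateway = LLMGateway(
    {
        "vendor_a": EchoProvider("vendor_a", cost_per_call=0.01),
        "vendor_b": EchoProvider("vendor_b", cost_per_call=0.004),
    },
    default="vendor_a",
)
first = gateway.complete("Summarize this ticket")
gateway.use("vendor_b")  # the switch is one line, not a refactor
second = gateway.complete("Summarize this ticket")
print(first.text, second.text, f"total spend: ${gateway.spend_usd:.3f}")
```

Tracking cost at the gateway is a deliberate choice in this sketch: it puts the spend number in the same place where the switching decision gets made.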

Not sure if this means you should let your R&D department sit in on pricing discussions or involve your finance team in LLM selection.

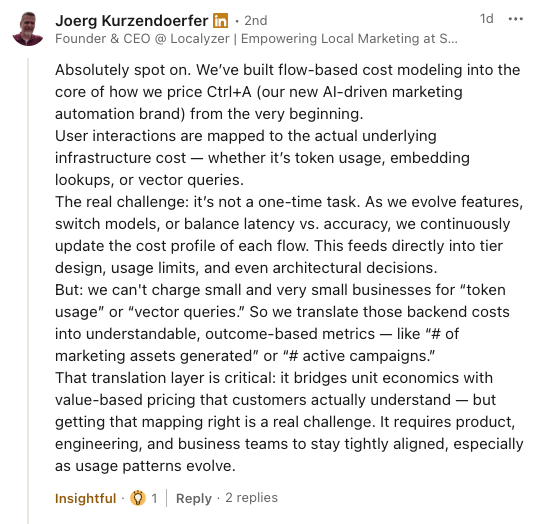

But if you get this right, smart infrastructure choices could give you a massive competitive advantage. This example from a founder who commented on one of my posts shows what a thoughtful approach could look like in practice:

I also found this article which shows some practical tips on how to bring down your LLM bill.

The Bottom Line

Even as prices continue dropping, the fundamental shift remains: engineering decisions now directly impact unit economics in ways they never did before.

Your CTO and CFO might need to become best friends.

The companies that figure this out early could offer generous free tiers while competitors burn cash, and undercut rivals on pricing because of smarter infrastructure choices.

The question isn't whether AI costs will stabilize. It's whether you and your team understand the economics behind every technical decision you make.

Key takeaway: In AI-heavy SaaS, there's no such thing as a purely technical decision anymore. Every model choice, every architecture decision, every API call is also a business decision that affects your ability to scale profitably.